The following are some guidelines for implementing NIC teaming (bonding) in Xen Cloud Platform. NIC teaming serves the following purposes:

Achieving more speed by grouping multiple physical resources

Failover with load balancing

Separating traffic by function. For example, storage traffic can be mapped to NIC x so that all storage traffic goes through only that port.

Types of Interfaces

Primary management interfaces. You can bond a primary management interface to another NIC so that the second NIC provides failover for management traffic. However, NIC bonding does not provide load balancing for management traffic.

NICs (non-management). You can bond together NICs that XenServer uses solely for VM traffic. Bonding these NICs not only provides resiliency, but also balances the traffic from multiple VMs between the NICs.

Other management interfaces. You can bond NICs that you have configured as management interfaces (for example, for storage). However, for most iSCSI software initiator storage, Citrix recommends configuring multipathing instead of NIC bonding since bonding management interfaces only provides failover without load balancing.

The illustration that follows shows the differences between the three different types of interfaces that you can bond.

This illustration shows how the links that are active in bonds vary according to traffic type. In the top picture of a management network, NIC 1 is active and NIC 2 is passive. For the VM traffic, both NICs in the bond are active. For the storage traffic, only NIC 3 is active and NIC 4 is passive.

Selecting a Type of NIC Bonding

When you configure XenServer to route VM traffic over bonded NICs, by default, XenServer balances the load between the two NICs. However, XenServer does not require you to configure NIC bonds with load balancing (active-active). You can configure either:

Active-active bonding mode.

XenServer sends network traffic over both NICs in a load-balanced manner. Active-active is the default bonding mode; XenServer uses it unless you configure otherwise.

Active-passive bonding mode.

XenServer only sends traffic over one NIC in the bonded pair. If that NIC loses connectivity, the traffic fails over to the NIC that is not being used.

The best mode for your environment varies according to your environment’s goals, budget, and switch performance. The sections for each mode discuss these considerations.

Note: Citrix strongly recommends bonding the primary management interface if the XenServer High Availability feature is enabled, as well as configuring multipathing or NIC bonding for the heartbeat SR.

1) Understanding Active-Active NIC Bonding

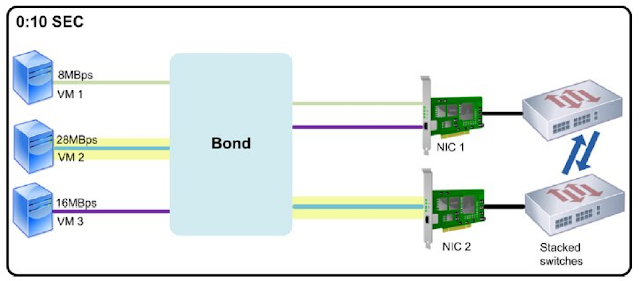

When you bond NICs used for guest traffic in the default active-active mode, XenServer sends network traffic over both NICs in the bonded pair to ensure that it does not overload any one NIC with traffic. XenServer does this by tracking the quantity of data sent from each VM’s virtual interfaces and rebalancing the data streams every 10 seconds. For example, if three virtual interfaces (A, B, C) are sending traffic to one bond and one virtual interface (Virtual Interface B) sends more VM guest traffic than the other two, XenServer balances the load by sending traffic from Virtual Interface B to one NIC and sending traffic from the other two interfaces to the other NIC.

Important: When creating bonds, always wait until the bond has been created before performing any other tasks on the pool. To determine whether XenServer has finished creating the bond, check the XenCenter logs.

The series of illustrations that follow shows how XenServer redistributes VM traffic according to load every ten seconds.

In this illustration, VM 3 is sending the most data (30 megabytes per second) across the network, so XenServer sends its traffic across NIC 2. VM 1 and VM 2 have the lowest amounts of data, so XenServer sends their traffic over NIC 1. The next illustration shows how XenServer reevaluates the load across the bonded pair after ten seconds.

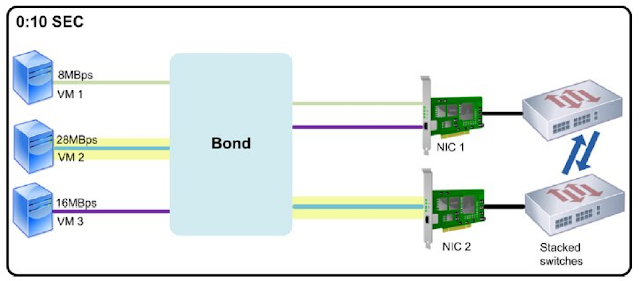

This illustration shows how, after ten seconds, XenServer reevaluates the amount of traffic the VMs are sending. When it discovers that VM 2 is now sending the most traffic, XenServer redirects VM 2’s traffic to NIC 2 and sends VM 3’s traffic across NIC 1 instead. XenServer continues to evaluate traffic every ten seconds, so the VM sending traffic across NIC 2 in the illustrations could change again at the twenty-second interval.

Traffic from a single virtual interface is never split between two NICs. This active-active mode is known as Source Load Balancing (SLB), which is based on the open-source Linux Adaptive Load Balancing (ALB) mode. Because SLB bonding is an active-active configuration, XenServer routes traffic over both NICs simultaneously.

XenServer does not load balance management and IP-based storage traffic. For these traffic types, NIC bonding only provides failover, even when the bond is in active-active mode.
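The rebalancing behaviour described above can be modelled with a short Python sketch. This is an illustrative greedy model, not XenServer's actual SLB implementation: the function name `rebalance`, the NIC labels, and every traffic figure other than the 30 MB/s quoted for VM 3 are assumptions made for the example. The model places the busiest virtual interface first and never splits one interface across two NICs.

```python
def rebalance(vif_load, nics):
    """Assign each virtual interface (VIF) to exactly one NIC.

    Illustrative greedy model only (an assumption, not XenServer's code):
    take VIFs from busiest to quietest and give each one to the NIC that
    currently carries the least traffic.
    """
    totals = {nic: 0.0 for nic in nics}
    assignment = {}
    busiest_first = sorted(vif_load.items(), key=lambda kv: kv[1], reverse=True)
    for vif, load in busiest_first:
        # Ties go to the NIC listed first.
        target = min(nics, key=lambda nic: totals[nic])
        assignment[vif] = target  # a VIF is never split between NICs
        totals[target] += load
    return assignment


# Loads in MB/s. Only VM 3's 30 MB/s comes from the text; the rest are made up.
first_interval = {"VM 1": 5, "VM 2": 10, "VM 3": 30}
second_interval = {"VM 1": 5, "VM 2": 40, "VM 3": 30}

nics = ["NIC 2", "NIC 1"]
print(rebalance(first_interval, nics))
print(rebalance(second_interval, nics))
```

With these inputs, the first call places VM 3 alone on NIC 2, and the second call moves VM 2 to NIC 2 and VM 3 to NIC 1, mirroring the two illustrations.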

2) Understanding Active-Passive NIC Bonding

XenServer supports running NIC bonds in an active-passive configuration. This means that XenServer routes traffic across one NIC in the bond: this is the only active NIC. XenServer does not send traffic over the other NIC in the bond so that NIC is passive, waiting for XenServer to redirect traffic to it if the active NIC fails. To configure XenServer to route traffic on a bond in active-passive, you must use the CLI to set a parameter on the master bond PIF (other-config:bond-mode=active-backup), as described in the XenServer Administrator’s Guide.
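The failover behaviour of an active-passive bond can be pictured with a very small Python model. It is a simplification under stated assumptions: the function name `active_nic` and the NIC labels are hypothetical, and the model ignores what happens when a failed NIC recovers.

```python
def active_nic(bond_nics, link_up):
    """Return the NIC that carries traffic in an active-passive bond.

    bond_nics: NIC names in preference order (the first is the usual active NIC).
    link_up:   mapping of NIC name -> True if the link has connectivity.
    Returns None when every NIC in the bond is down.
    """
    for nic in bond_nics:
        if link_up.get(nic, False):
            return nic
    return None


bond = ["NIC 3", "NIC 4"]
# Both links up: the first NIC is active and the second stays passive.
print(active_nic(bond, {"NIC 3": True, "NIC 4": True}))
# The active NIC loses connectivity: traffic fails over to the passive NIC.
print(active_nic(bond, {"NIC 3": False, "NIC 4": True}))
```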

When designing any network configuration, it is best to strive for simplicity by reducing components and features to the minimum required to meet your business goals. Based on this principle, consider configuring active-passive NIC bonding in situations such as the following:

* When you are connecting one NIC to a switch that does not work well with active-active bonding. In that case you might see symptoms such as packet loss, an incorrect ARP table on the switch (the switch does not update it correctly), or incorrect settings on the switch ports (for example, you configure aggregation for the ports and it does not work).

* When you do not need load balancing or when you only intend to send traffic on one NIC. For example, if the redundant path uses a cheaper technology (such as a lower-performing switch or external uplink) that results in slower performance, configure active-passive bonding instead.

Bonding Management Interfaces and MAC Addressing

Because a bond functions as one logical unit, the two NICs share a single MAC address, regardless of whether the bond is active-active or active-passive. Unless otherwise specified, the bonded pair uses the MAC address of the first NIC in the bond. You can determine the first NIC in the bond as follows:

* In XenCenter, the first NIC in the bond is the NIC assigned the lowest number. For example, for a bonded NIC named “Bond 2+3,” the first NIC in the bond is NIC 2.

* When creating a bond using the xe bond-create command, the first PIF listed in the pif-uuids parameter is the first NIC in the bond.

When creating a bond, make sure that the IP address of the management interface is the same before and after creating the bond. If using DHCP, make sure that the MAC address of the management interface before creating the bond (that is, the address of one of the two NICs) is the same as the MAC address of the bond after it is created.
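The rules above for finding the first NIC, and the DHCP check on the MAC address, can be written down as a few Python helpers. This is only a sketch: the function names are hypothetical, the bond name format is taken from the “Bond 2+3” example, and the MAC addresses in the usage lines are placeholders.

```python
import re


def first_nic_from_bond_name(bond_name):
    """Return the lowest NIC number in a XenCenter-style bond name such as 'Bond 2+3'."""
    numbers = [int(n) for n in re.findall(r"\d+", bond_name)]
    if not numbers:
        raise ValueError(f"no NIC numbers found in {bond_name!r}")
    return min(numbers)


def first_pif_uuid(pif_uuids):
    """Return the first PIF in a comma-separated pif-uuids value."""
    return pif_uuids.split(",")[0].strip()


def dhcp_mac_unchanged(management_mac_before, bond_mac):
    """True if the bond kept the MAC the management interface had before bonding."""
    return management_mac_before.lower() == bond_mac.lower()


print(first_nic_from_bond_name("Bond 2+3"))
print(first_pif_uuid("uuid-pif-1,uuid-pif-2"))
# Placeholder MAC addresses, compared case-insensitively.
print(dhcp_mac_unchanged("00:11:22:AA:BB:CC", "00:11:22:aa:bb:cc"))
```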

CONFIGURATION

1) Using XenCenter

1. Ensure that the NICs you want to bond together (the bond slaves) are not in use: you must shut down any VMs with virtual network interfaces using the bond slaves before creating the bond. After you have created the bond, you will need to reconnect the virtual network interfaces to an appropriate network.

2. Select the server in the Resources pane then click on the NICs tab and click Create Bond.

3. Select the NICs you want to bond together. To select a NIC, select its check box in the list. Only 2 NICs may be selected in this list. Clear the check box to deselect a NIC.

4. Under Bond mode, choose the type of bond:

* Select Active-active to configure an active-active bond, where traffic is balanced between the two bonded NICs and, if one NIC within the bond fails, the host server's network traffic automatically routes over the second NIC.

* Select Active-passive to configure an active-passive bond, where traffic passes over only one of the bonded NICs. In this mode, the second NIC only becomes active if the active NIC fails, for example, if it loses network connectivity.

5. To use jumbo frames, set the Maximum Transmission Unit (MTU) to a value between 1500 and 9216.

6. To have the new bonded network automatically added to any new VMs created using the New VM wizard, select the check box.

7. Click Create to create the NIC bond and close the dialog box. XenCenter will automatically move management interfaces (primary and secondary) from bond slaves to the bond master when the new bond is created.

2) Using Xen Commands

The following commands are used to team (or bond) two NICs into a single interface for network redundancy purposes.

* Create a new pool-wide (virtual) network for use with the bonded NICs:

xe network-create name-label=[network-name]

Where: network-name is the name of the newly created (virtual) network.

This command returns the uuid of the newly created network. Make a note of it, because the following commands need it.

* Create a new bond for this network:

xe bond-create network-uuid=[uuid-network] pif-uuids=[uuid-pif-1],[uuid-pif-2]

Where: uuid-network is the unique identifier of the network; uuid-pif-1 is the unique identifier of the first physical interface in the bond; uuid-pif-2 is the unique identifier of the second physical interface in the bond.

This command returns the uuid of the newly created bond. Make a note of it for later reference.

* Retrieve the unique identifier of the bond PIF:

xe pif-list network-uuid=[uuid-network]

Where: uuid-network is the unique identifier of the network.

* Configure the bond as an active/passive bond:

xe pif-param-set uuid=[uuid-bond-pif] other-config:bond-mode=active-backup

Where: uuid-bond-pif is the unique identifier of the bond PIF, and bond-mode selects the bonding mode. Choose the value that fits your needs (see the bond types described earlier).

* Configure the bond as an active/active bond:

xe pif-param-set uuid=[uuid-bond-pif] other-config:bond-mode=balance-slb

Where: uuid-bond-pif is the unique identifier of the bond PIF.
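The command sequence above can be chained in a small Python wrapper so that each uuid is passed on automatically. Treat this as a sketch under assumptions rather than a supported tool: the names `run_xe` and `create_bond` are hypothetical, it uses the `--minimal` flag of xe (not shown in the steps above) to print only the uuid, and it assumes the bond PIF is the only PIF on the new network.

```python
import subprocess


def run_xe(args):
    """Run the xe CLI with the given arguments and return its trimmed output."""
    result = subprocess.run(["xe", *args], check=True, capture_output=True, text=True)
    return result.stdout.strip()


def create_bond(network_name, pif_uuids, bond_mode, run=run_xe):
    """Create a network and a bond on it, then set the bond mode.

    pif_uuids: list of PIF uuids; the first one is the first NIC in the bond.
    bond_mode: 'active-backup' or 'balance-slb', as used in the steps above.
    run:       callable that executes xe (swappable for a dry run or a test).
    """
    if bond_mode not in ("active-backup", "balance-slb"):
        raise ValueError(f"unexpected bond mode: {bond_mode}")
    network_uuid = run(["network-create", f"name-label={network_name}"])
    bond_uuid = run(["bond-create", f"network-uuid={network_uuid}",
                     "pif-uuids=" + ",".join(pif_uuids)])
    # Assumption: the bond PIF is the only PIF attached to the new network.
    bond_pif_uuid = run(["pif-list", f"network-uuid={network_uuid}", "--minimal"])
    run(["pif-param-set", f"uuid={bond_pif_uuid}",
         f"other-config:bond-mode={bond_mode}"])
    return {"network": network_uuid, "bond": bond_uuid, "bond_pif": bond_pif_uuid}


def dry_run(args):
    """Print the command instead of running it and return a placeholder uuid."""
    print("xe " + " ".join(args))
    return "<uuid>"


# Dry run with placeholder uuids: nothing is executed on the host.
create_bond("bond-network", ["<uuid-pif-1>", "<uuid-pif-2>"], "active-backup", run=dry_run)
```

Passing `run=dry_run` keeps the example safe to try anywhere; on a real host, omit the `run` argument so that `run_xe` executes the commands.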

3) LACP

An LACP bond can be created from the dom0 command line as follows:

xe bond-create mode=lacp network-uuid=<network-uuid> pif-uuids=<pif-uuids>

The hashing algorithm can be specified at creation time (the default is tcpudp_ports):

xe bond-create mode=lacp properties:hashing_algorithm=<halg> network-uuid=<network-uuid> pif-uuids=<pif-uuids>

where <halg> is src_mac or tcpudp_ports.

You can also change the hashing algorithm for an existing bond, as shown below.

xe bond-param-set uuid=<bond-uuid> properties:hashing_algorithm=<halg>

It is possible to customize the rebalancing interval by changing the bond PIF parameter other-config:bond-rebalance-interval and then re-plugging the PIF. The value is expressed in milliseconds. For example, the following commands change the rebalancing interval to 30 seconds:

xe pif-param-set other-config:bond-rebalance-interval=30000 uuid=<pif-uuid>

xe pif-plug uuid=<pif-uuid>
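The LACP options can be assembled with a couple of Python helpers that validate the hashing algorithm and convert seconds to milliseconds. This is a hedged sketch: the function names are hypothetical, the helpers only build argument lists and run nothing, and the property key follows the `hashing_algorithm` spelling used in the bond-param-set command.

```python
HASHING_ALGORITHMS = ("src_mac", "tcpudp_ports")


def lacp_bond_create_args(network_uuid, pif_uuids, hashing_algorithm="tcpudp_ports"):
    """Build the xe arguments for creating an LACP bond."""
    if hashing_algorithm not in HASHING_ALGORITHMS:
        raise ValueError(f"hashing algorithm must be one of {HASHING_ALGORITHMS}")
    return ["bond-create", "mode=lacp",
            f"properties:hashing_algorithm={hashing_algorithm}",
            f"network-uuid={network_uuid}",
            "pif-uuids=" + ",".join(pif_uuids)]


def rebalance_interval_args(pif_uuid, seconds):
    """Build the xe arguments that set the rebalancing interval (stored in milliseconds)."""
    milliseconds = int(seconds * 1000)
    return ["pif-param-set",
            f"other-config:bond-rebalance-interval={milliseconds}",
            f"uuid={pif_uuid}"]


# Placeholder uuids; the printed lines are the commands you would run in dom0.
print("xe " + " ".join(lacp_bond_create_args("<network-uuid>", ["<pif-1>", "<pif-2>"], "src_mac")))
print("xe " + " ".join(rebalance_interval_args("<pif-uuid>", 30)))
```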

The two LACP bond modes are not displayed if you use a version older than XenCenter 6.1 or if you use the Linux bridge network stack.

Configuring LACP on a switch

Unlike the other supported bonding modes, LACP requires setup on the switch side. The switch must support the IEEE 802.3ad standard. As with the other bonding modes, the best practice is to connect the NICs to different switches in order to provide better redundancy. There is no Hardware Compatibility List (HCL) of switches. IEEE 802.3ad is widely recognized and applied, so any switch with LACP support that observes this standard should work with XenServer LACP bonds.

Steps for configuring LACP on a switch

1. Identify the switch ports connected to the NICs to bond.

2. Using the switch web interface or command line interface, set up the same LAG (Link Aggregation Group) number for all the ports to be bonded.

3. For all the ports to be bonded, set LACP to active (for example “LACP ON” or “mode auto”).

4. If necessary, bring up the LAG/port-channel interface.

5. If required, configure VLAN settings for the LAG interface, just as it would be done for a standalone port.

Example: Cisco Catalyst 3750G-A8

Configuration of LACP on ports 23 and 24 on the switch:

C3750-1#configure terminal

Enter configuration commands, one per line. End with CNTL/Z.

C3750-1(config)#interface Port-channel3

C3750-1(config-if)#switchport trunk encapsulation dot1q

C3750-1(config-if)#switchport mode trunk

C3750-1(config-if)#exit

C3750-1(config)#interface GigabitEthernet1/0/23

C3750-1(config-if)#switchport mode trunk

C3750-1(config-if)#switchport trunk encapsulation dot1q

C3750-1(config-if)#channel-protocol lacp

C3750-1(config-if)#channel-group 3 mode active

C3750-1(config-if)#exit

C3750-1(config)#interface GigabitEthernet1/0/24

C3750-1(config-if)#switchport mode trunk

C3750-1(config-if)#switchport trunk encapsulation dot1q

C3750-1(config-if)#channel-protocol lacp

C3750-1(config-if)#channel-group 3 mode active

C3750-1(config-if)#exit

C3750-1(config)#exit

4) Diagnostics

Logs play an important role in troubleshooting. The following log files can be useful when diagnosing a NIC teaming setup:

File /var/log/messages

File /var/log/xensource.log

File /var/log/xensource.log contains useful network daemon entries.
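When these files are large, it can help to pull out only the lines that mention the bond. The Python sketch below does this for the two files listed above. The keyword `bond` is an assumption about what is worth searching for, not a documented log format, and the function names are hypothetical.

```python
from pathlib import Path

LOG_FILES = ("/var/log/messages", "/var/log/xensource.log")


def matching_lines(lines, keywords=("bond",)):
    """Return the lines that mention any keyword, ignoring case."""
    lowered = [keyword.lower() for keyword in keywords]
    return [line.rstrip("\n") for line in lines
            if any(keyword in line.lower() for keyword in lowered)]


def scan_logs(paths=LOG_FILES, keywords=("bond",)):
    """Yield (path, line) for matching lines in each readable log file."""
    for path in map(Path, paths):
        if not path.is_file():
            continue  # skip log files that do not exist on this host
        try:
            with path.open(errors="replace") as handle:
                for line in matching_lines(handle, keywords):
                    yield str(path), line
        except OSError:
            continue  # unreadable file (for example, insufficient permissions)
```

On the host, iterating over `scan_logs()` yields each matching line together with the file it came from; pass a different `keywords` tuple to widen or narrow the search.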